Run Apache Spark™ Code with the Snowflake Engine.

Modernize Your Apache Spark Workloads with Spark Connect for Snowpark

Accelerate Spark Workflows by Tapping Into the Power of the Snowflake Engine

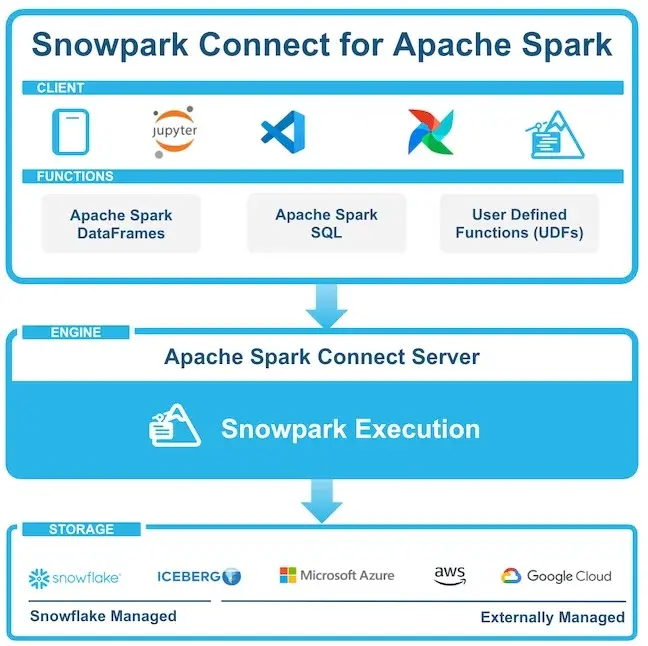

Snowflake now supports Apache Spark Connect for Snowpark, allowing organizations to run Spark DataFrame, Spark SQL, and UDF code directly on the Snowflake compute engine without managing separate Spark infrastructure. Whether your data resides in Snowflake, in Apache Iceberg tables (Snowflake-managed or externally managed), or in cloud object storage, your existing Spark workflows can now run within Snowflake's secure and performant environment.

Infostrux can help your data teams adopt this capability to reduce operational complexity, lower costs, and accelerate innovation while maintaining enterprise-grade governance.

Key Benefits of Apache Spark Connect for Snowpark

Lower TCO with Unified Compute

Running Apache Spark code directly on Snowflake eliminates the need for dedicated Spark clusters. This reduces infrastructure overhead, simplifies maintenance, and lets teams focus on delivering business value rather than managing infrastructure.

Faster Migration and Development

Spark Connect is compatible with the familiar Spark APIs (DataFrame, SQL, and UDFs), so teams can quickly migrate existing pipelines, test new use cases, and build future-proof solutions.

Enterprise-Grade Governance

Use Snowflake's built-in data governance framework to manage access, lineage, and compliance across Spark workloads, so your governance policies extend across every stage of the data engineering lifecycle.

How It Works

Spark Connect lets customers run Apache Spark code from their preferred tools, such as Snowflake Notebooks, Jupyter, VS Code, Apache Airflow, or Spark Submit, while Snowflake handles the compute. The same code can work with data in Snowflake-managed storage and in external locations such as Iceberg tables and cloud object storage. No additional cluster provisioning or scaling logic is required.
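In open-source Spark, Spark Connect is a client-server protocol. Tools like those listed above act as thin clients that send DataFrame and SQL operations over gRPC to a remote server, and that server runs the work. Clients find the server through a connection string of the form `sc://host:port/;key=value;...`. The sketch below builds and parses such strings in plain Python. It is illustrative only. The helper names, the `localhost` host, and port 15002 (the default Spark Connect port in open-source Spark) are assumptions for this example. This page does not say which endpoint or authentication parameters Snowflake's service expects.

```python
from __future__ import annotations

from urllib.parse import quote, unquote, urlparse

# Default port of an open-source Spark Connect server. Whether a Snowflake
# endpoint uses this port is an assumption made only for this sketch.
DEFAULT_SPARK_CONNECT_PORT = 15002


def build_spark_connect_url(host: str, port: int = DEFAULT_SPARK_CONNECT_PORT, **params: str) -> str:
    """Return sc://host:port/;k1=v1;k2=v2 (hypothetical helper, not a library API)."""
    if not host:
        raise ValueError("host must not be empty")
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    url = f"sc://{host}:{port}"
    if params:
        # Parameters follow "/;" and are separated by ";". Values are URL-encoded
        # so characters such as ";" or "=" cannot break the format.
        url += "/;" + ";".join(f"{key}={quote(str(value), safe='')}" for key, value in params.items())
    return url


def parse_spark_connect_url(url: str) -> tuple[str, int, dict[str, str]]:
    """Split a connection string into (host, port, params) (hypothetical helper)."""
    if not url.startswith("sc://"):
        raise ValueError("Spark Connect URLs must use the sc:// scheme")
    # Swap the scheme for http so the standard-library parser separates the
    # ";"-delimited parameters from the (empty) path.
    parsed = urlparse("http" + url[2:])
    if parsed.path not in ("", "/"):
        raise ValueError("Spark Connect URLs must not contain a path")
    if parsed.hostname is None:
        raise ValueError("missing host")
    params: dict[str, str] = {}
    if parsed.params:
        for part in parsed.params.split(";"):
            key, sep, value = part.partition("=")
            if not key or not sep:
                raise ValueError(f"malformed parameter: {part!r}")
            params[key] = unquote(value)
    return parsed.hostname, parsed.port or DEFAULT_SPARK_CONNECT_PORT, params


if __name__ == "__main__":
    url = build_spark_connect_url("localhost", user_id="analyst")
    print(url)  # sc://localhost:15002/;user_id=analyst
    print(parse_spark_connect_url(url))  # ('localhost', 15002, {'user_id': 'analyst'})
```

With PySpark 3.4 or later, a client passes a string like this to `SparkSession.builder.remote(...)`. Existing DataFrame and SQL code then runs against whichever server the string points to. Starting or authenticating against Snowflake's Spark Connect service is outside the scope of this sketch.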

Why It Matters

Organizations that rely on Apache Spark can now unify their analytics, engineering, and machine learning workflows within Snowflake. By running Spark code natively on the Snowflake platform, teams gain:

- Operational simplicity with fewer moving parts
- Reduced infrastructure costs
- Faster time-to-value for new pipelines
- Consistent governance and security

Get Started with Infostrux

As a Snowflake Elite Services Partner, Infostrux is well positioned to help you take full advantage of Spark Connect for Snowpark. Whether you're migrating existing Spark workloads or building new ones, our team will help you design a strategy that delivers value quickly, with less risk and greater scalability.
